In recent months, there has been a surge of news about artificial intelligence systems: specialized websites and video hosting platforms are brimming with content on ChatGPT and Midjourney, while developers eagerly share examples of code generated by the Copilot AI assistant.

This attention has not gone unnoticed by corporations: Google and Microsoft have both reacted to the new language models. Google fears that these models may disrupt its position in the market, while Microsoft plans to invest a hefty $10 billion in OpenAI, the company behind ChatGPT.

Neural networks are integrated in many projects

Neural networks are already being integrated into various software projects, including text editors, browsers, and cloud platforms. However, running these machine learning models requires substantial computing resources, often at a cost of hundreds of thousands of dollars per day.

In light of this, there is growing interest in more accessible alternatives, such as “fog computing”. Fog computing is a type of cloud computing where data processing occurs not in a centralized data center but in a distributed network of devices. Market Research Future projects that the fog computing market will reach $343 million by 2030. As a result, solutions are emerging for deploying machine learning models in a peer-to-peer (P2P) format, making them more accessible and cost-effective.

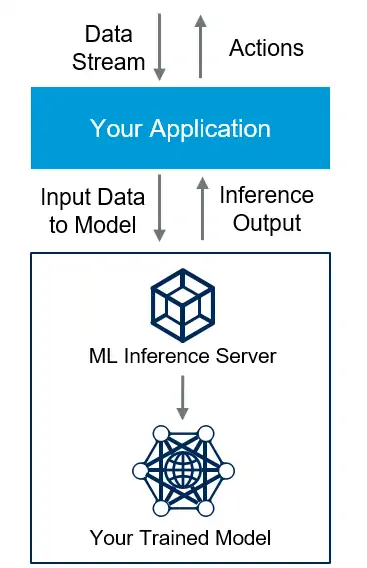

The illustration above shows how a server for machine learning model inference typically operates, with neural networks running continuously on end devices. In the P2P approach, users instead provide resources to each other for training machine learning models and running inference in a torrent-like format.

While some users share resources on a charitable basis to contribute to the community, it is more common for them to receive incentives in return, such as accelerated calculations and priority access to running machine learning models. Many P2P platforms are built on blockchain technology and reward hosts with tokens for their participation.

Deploying language models in BitTorrent format

A notable example of a platform that enables P2P deployment of machine learning (ML) models is Petals. The project is built on BLOOM-176B, an open-source text generation model. BLOOM-176B has 176 billion parameters, the values that determine how input is transformed into output, which is slightly more than the 175 billion of GPT-3.

BLOOM (BigScience Large Open-science Open-access Multilingual Language Model) is an autoregressive large language model (LLM) that can generate text continuations from a given prompt in 46 natural languages and 13 programming languages. Unlike well-known LLMs such as GPT-3 from OpenAI and LaMDA from Google, BLOOM is supported by volunteers and funded by the French government, adhering to its original goal of working for the benefit of society.

On the Petals platform, users download a small part of the BLOOM model and collaborate with others who host different parts of the model for inference or fine-tuning. Inference takes approximately 1 second per step (token), which is fast enough for interactive applications such as chatbots.
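The idea of hosting different parts of one model on different machines can be illustrated with a toy sketch. This is not the Petals API: the `Peer` class, `split_across_peers`, and `run_pipeline` are hypothetical names, the "layers" are trivial functions, and network transfer between peers is replaced by ordinary function calls.

```python
from dataclasses import dataclass
from typing import Callable, List

Layer = Callable[[float], float]


@dataclass
class Peer:
    """A volunteer machine hosting a contiguous slice of the model's layers."""
    name: str
    layers: List[Layer]

    def forward(self, activation: float) -> float:
        # Apply only the layers this peer hosts, in order.
        for layer in self.layers:
            activation = layer(activation)
        return activation


def split_across_peers(layers: List[Layer], num_peers: int) -> List[Peer]:
    """Assign contiguous blocks of layers to peers as evenly as possible."""
    base, extra = divmod(len(layers), num_peers)
    peers, start = [], 0
    for i in range(num_peers):
        size = base + (1 if i < extra else 0)
        peers.append(Peer(f"peer-{i}", layers[start:start + size]))
        start += size
    return peers


def run_pipeline(peers: List[Peer], x: float) -> float:
    """Pass the activation from peer to peer (a network hop in a real system)."""
    for peer in peers:
        x = peer.forward(x)
    return x


# Toy "model": 8 layers that each add 1 to the activation.
layers = [lambda v: v + 1.0 for _ in range(8)]
peers = split_across_peers(layers, 3)
print([len(p.layers) for p in peers])  # [3, 3, 2]
print(run_pipeline(peers, 0.0))        # 8.0
```

Every generated token requires one full pass through all peers, so at roughly 1 second per token a 50-token reply would take on the order of 50 seconds.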

It may not be feasible to replicate this approach with proprietary models such as Midjourney or OpenAI's ChatGPT; OpenAI, for one, has shifted towards more commercial activities, deviating from its original mission of open collaboration for societal benefit. In theory, however, a decentralized Folding@home-style project could be built around Stable Diffusion, which is an open-source model.

Decentralized solutions for machine learning

One noteworthy project in this field is PyGrid, which is designed for data scientists and tailored for federated machine learning. In this approach, ML models are trained on local data samples held on edge devices or servers, without the data being gathered in one place. PyGrid is built on the PySyft library, which serves as a wrapper for popular machine learning frameworks such as PyTorch, TensorFlow, and Keras.

PyGrid consists of three main components:

- Network, which is responsible for monitoring and routing instructions

- Domain, for storing data and federated learning models

- Worker, which includes a set of compute instances.
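The federated approach described above can be sketched with a minimal federated-averaging loop. This is an illustration in plain Python, not PyGrid or PySyft code: the function names, the one-parameter model `y = w * x`, and the sample data are all assumptions chosen to keep the example short.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair held privately by one client


def local_update(w: float, data: List[Sample], lr: float = 0.1) -> float:
    """One pass of gradient descent for y = w * x on a client's own data."""
    for x, y in data:
        w -= lr * (w * x - y) * x
    return w


def federated_average(updates: List[float], sizes: List[int]) -> float:
    """Combine client weights, weighted by how many samples each client has."""
    total = sum(sizes)
    return sum(w * n for w, n in zip(updates, sizes)) / total


# Two clients whose data follows y = 2x. The raw samples never leave the
# client; only the updated weight is sent to the coordinator.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
]

global_w = 0.0
for _ in range(20):
    updates = [local_update(global_w, data) for data in clients]
    global_w = federated_average(updates, [len(d) for d in clients])

print(round(global_w, 4))  # approaches 2.0
```

Weighting by sample count is one common choice; a real deployment would also have to handle clients that drop out and protect the updates themselves.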

In addition to projects focused on decentralized inference, solutions are also being developed for distributed training. One example is iChain, a blockchain-based application proposed by a group of engineers in the United States.

From the user’s perspective, iChain operates in a straightforward manner. Users upload a file for machine learning processing to a dedicated directory, create a processing request, and select a provider of computing resources and a validator. The validator evaluates the completed file and passes it back to the user. For capacity providers, the process is equally simple: they register on the network, run the application, receive tasks for processing, and are rewarded with tokens.

With iChain, users can pay capacity owners to perform machine learning tasks instead of relying solely on their own computers. The application uses the Ethereum blockchain for security, decentralization, and payments, providing a platform for distributed machine learning.
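The request, processing, and validation steps described above can be modelled as a small state machine. The sketch below is purely illustrative and is not iChain's code or contract interface: every class and function name is hypothetical, the ledger is an in-memory dictionary rather than a blockchain, and paying the provider only after a successful validation is an assumption.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Dict


class Status(Enum):
    REQUESTED = auto()  # user uploaded a file and created a request
    PROCESSED = auto()  # provider finished the computation
    VALIDATED = auto()  # validator accepted the result
    REJECTED = auto()   # validator rejected the result


@dataclass
class Task:
    file_name: str
    provider: str
    validator: str
    reward: int  # tokens offered by the user (hypothetical unit)
    status: Status = Status.REQUESTED


@dataclass
class TokenLedger:
    """In-memory stand-in for on-chain token balances."""
    balances: Dict[str, int] = field(default_factory=dict)

    def pay(self, account: str, amount: int) -> None:
        self.balances[account] = self.balances.get(account, 0) + amount


def process(task: Task) -> None:
    """Provider side: run the job (omitted here) and mark it as processed."""
    if task.status is not Status.REQUESTED:
        raise ValueError("task is not waiting for processing")
    task.status = Status.PROCESSED


def validate(task: Task, ledger: TokenLedger, accepted: bool) -> None:
    """Validator side: accept or reject the result; pay the provider if accepted."""
    if task.status is not Status.PROCESSED:
        raise ValueError("task has not been processed yet")
    if accepted:
        task.status = Status.VALIDATED
        ledger.pay(task.provider, task.reward)
    else:
        task.status = Status.REJECTED


ledger = TokenLedger()
task = Task("dataset.csv", provider="provider-1", validator="validator-1", reward=10)
process(task)
validate(task, ledger, accepted=True)
print(task.status.name, ledger.balances)
```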

Customized solutions for individual needs

Stable Horde is a crowdsourced project that unites volunteers to create images using Stable Diffusion. By offering your video card and installing the client, you can generate content on other GPUs for free. Registered users can also vote for the best generated images and earn a rating that influences how quickly their tasks are processed.
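One simple way a rating could influence processing speed is a priority queue in which higher-rated users are served first. The sketch below is an assumption about how such a scheme might look, not Stable Horde's actual scheduler; the `JobQueue` class and the rating values are hypothetical.

```python
import heapq
from itertools import count
from typing import List, Tuple


class JobQueue:
    """Serve jobs from higher-rated users first; ties go to the earlier job."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str, str]] = []
        self._order = count()  # tie-breaker that preserves submission order

    def submit(self, user: str, rating: int, prompt: str) -> None:
        # heapq is a min-heap, so the rating is negated to pop the highest first.
        heapq.heappush(self._heap, (-rating, next(self._order), user, prompt))

    def next_job(self) -> Tuple[str, str]:
        _, _, user, prompt = heapq.heappop(self._heap)
        return user, prompt


queue = JobQueue()
queue.submit("anonymous", 0, "a castle")
queue.submit("contributor", 120, "a forest")
queue.submit("newcomer", 5, "a river")

print([queue.next_job()[0] for _ in range(3)])
# ['contributor', 'newcomer', 'anonymous']
```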

Another noteworthy example is the Hivemind library, which underpins the aforementioned Petals project. It is specifically designed to train large models on hundreds of computers contributed by universities, companies, and volunteers. This collaborative approach enables a customized solution tailored to individual needs.

Addressing challenges and innovations in AI

Decentralized platforms offer a cost-effective, if not entirely free, alternative to paid services for text, code, and image generation. However, these P2P platforms for training ML models do come with limitations, particularly in terms of information security.

Processing user data on numerous devices makes it hard to ensure robust security in distributed platforms. While encryption can protect data during transfer and storage, hosts still face inherent risks when they accept data and code from other sources, which could compromise the integrity of their systems.

Containerization may help mitigate such risks: applications are encapsulated within containers and executed in a “sandboxed” environment, which can minimize the impact of potentially malicious code on the host’s computing system. In addition, with a managed Kubernetes service, the management plane remains within the cloud provider’s domain of responsibility, which further enhances security.
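As a rough illustration of sandboxing, the sketch below assembles a `docker run` command with several restrictive options for an untrusted job. The helper name, image name, and resource limits are assumptions; the command is only built and printed, not executed. A real P2P worker would need some network access, and containers alone do not guarantee full isolation.

```python
import shlex
from typing import List


def sandboxed_run_command(
    image: str,
    command: List[str],
    memory: str = "2g",
    cpus: str = "1.0",
) -> List[str]:
    """Build a `docker run` invocation with restrictive defaults."""
    return [
        "docker", "run", "--rm",
        "--network", "none",                    # no network access
        "--read-only",                          # read-only root filesystem
        "--cap-drop", "ALL",                    # drop all Linux capabilities
        "--security-opt", "no-new-privileges",  # block privilege escalation
        "--pids-limit", "256",                  # cap the number of processes
        "--memory", memory,                     # cap RAM
        "--cpus", cpus,                         # cap CPU share
        image, *command,
    ]


# Hypothetical image and entry point, for illustration only.
cmd = sandboxed_run_command("example/untrusted-job:latest", ["python", "job.py"])
print(shlex.join(cmd))
```

The list form can be passed to `subprocess.run` without a shell, which avoids quoting problems if the image or command comes from another peer.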

Another important consideration is the potential for algorithms to generate “toxic” content, which may be more challenging to control in a P2P environment than in a centralized setup. Developers of machine learning models need to be mindful of this and incorporate appropriate disclaimers and safeguards, as demonstrated in the Petals repository.

Addressing data privacy, security, and content generation concerns in decentralized AI platforms requires thoughtful policies and measures, as well as ongoing innovation in the development and deployment of machine learning models.

Conclusion

Decentralized machine learning platforms offer innovative and cost-effective solutions for text, code, and image generation tasks. However, they also present challenges related to information security, data privacy, and content generation. Containerization and encryption mechanisms can help mitigate security risks, and developers need to be cautious about potential “toxic” content generated by algorithms in a P2P environment.

While decentralized machine learning platforms offer promising opportunities for democratizing access to AI capabilities, careful consideration of security measures, data privacy policies, and content generation safeguards is crucial. Ongoing innovation and collaboration among developers, users, and stakeholders are also essential to address these challenges and ensure the responsible and ethical use of AI in decentralized environments. By proactively addressing these concerns, decentralized machine learning platforms can unlock their full potential and enable widespread access to AI capabilities while maintaining robust security and privacy standards.